Sometime in the first week of July 2009, a few School friends living in different cities were talking to each other and feeling the pain of not having met in the 25 years since leaving the School. They were also feeling the loss of contact with most of their other friends. I had passed out of the School in 1984, when there were no mobile phones and even landline phones were scarce. So, we lost contact.

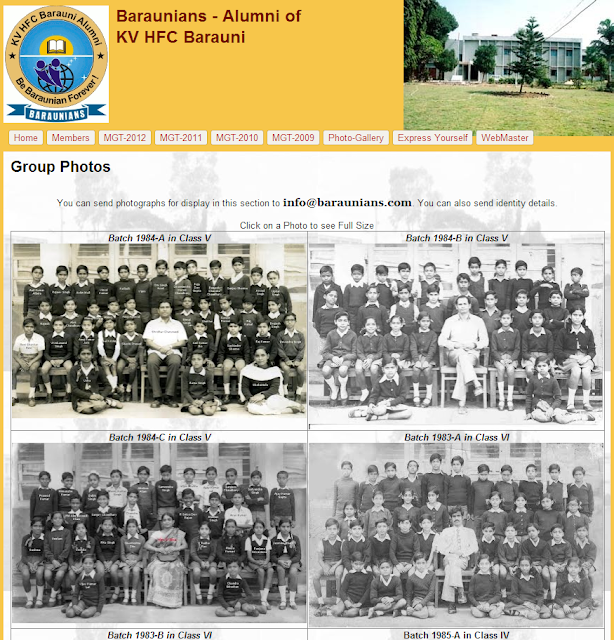


They were all saying, "Let's get together." But the desire was not materializing for one reason or another. I was in Ghaziabad (Delhi-NCR region), Rajesh Chandra Prasad was in Rajkot (Gujarat), Rajiv Ranjan Dwivedi was in Varanasi, Rajeev Kumar Singh was in Vasundhara (Ghaziabad) and Anil Kumar Jha was in Noida.

All of a sudden, one fine morning, Rajesh called me and said, "I am coming to Delhi on 25th July. Call the other friends and let's meet." I called up Rajiv Ranjan in Varanasi and he immediately agreed. Anil Kumar Jha and Rajeev Kumar Singh agreed immediately too. As we anticipated a small gathering, I offered my residence in Rajendra Nagar, Ghaziabad, as the venue. We informed a couple more friends about the get-together. Now the surprising chain reaction started. Nidhi Chaturvedi (Sharma) and Bimal Kumar were most instrumental in giving more oxygen to the reaction. Everyone started informing every other person they were in touch with, passing on contact details and spreading word of the get-together of 25th July 2009.

Finally, the get-together happened on 25th July 2009 with extraordinary emotion and warmth. The weather was not very supportive (it was hot and humid), and a power cut made it worse. People from different batches gathered at my residence at around 8:00 pm. Many people later regretted that they did not join. It was a truly extraordinary, cheerful gathering, as most people were seeing each other after almost 25 years.

Image: Get-together of Baraunians on 25th July 2009

About our common legacy and the School: all the friends mentioned above and I studied at a School called "Kendriya Vidyalaya", situated in the township of Urvarak Nagar, HFC (Hindustan Fertilizer Corporation Ltd), Barauni, Bihar, India. The School is popularly known as KV HFC Barauni. Barauni is an industrial belt. Children of employees of HFC, IOC (Indian Oil Corporation Ltd) and the Thermal Power Plant, and children from nearby localities, used to study in the School. Most of my batchmates and I joined the School in 1972 in 1st standard, when the School was established. I passed out of 12th standard in 1984 and joined Allahabad University. My visits to Barauni became limited to once a year. My Father took voluntary retirement in 1988 and went back to Ghazipur to look after our ancestral farmland. After that I never went back to Barauni.

The Fertilizer Factory of HFC Barauni was closed by the Government of India owing to huge losses, and the Urvarak Nagar Colony became deserted. The employees and their families, who had shared a close community bond, were scattered and lost touch with one another. The KVS (Kendriya Vidyalaya Sangathan) too decided to close the School in April 2003. Students and parents from nearby areas protested, but nothing came of it. Later, the Urvarak Nagar Colony was handed over to the SSB (Sashastra Seema Bal), an armed force, to operate its Training Centre. This made the Colony inhabited once again, and the School reopened. Then came an over-enthusiastic politician, Ram Vilas Paswan (then Chemicals and Fertilisers Minister), who mooted the non-feasible idea of reopening the Fertilizer Factory as a natural-gas-based plant, with no natural gas available in the vicinity. He also gave marching orders to the SSB to vacate the premises. The Colony again became deserted, and the KVS tries to close the School at the beginning of each academic session. All of us ex-students of KV HFC Barauni are emotionally attached to the School, and we would never like to see our alma mater closed like this.

Image: The KV HFC Barauni School building and playground in 1988

The news of the 25th July get-together started spreading like wildfire. The Mini-Get-Together sparked a nuclear reaction. Information about newly found old friends started pouring in every day. I started maintaining a spreadsheet file with contact details, so everyone started informing me whenever they found another old friend. In turn, I called the newly discovered School friend (of whichever batch), noted the complete contact details and entered them in the spreadsheet. This made me the central point of the endeavor, though everyone's effort was equal. By this time we had started calling our Group "Baraunians". We also coined a slogan for the Group, "Be Baraunian forever".

I started circulating the spreadsheet by email every morning and evening, with the new entries, to everyone on the list. But as the list grew longer, the emailing software started refusing to send mail to so many people in one go. So, on 30th July 2009, I created a group ID on Google Groups, Baraunians@googleGroups.com. The circulated spreadsheet was also difficult for people to use, as they could not keep track of the latest version. Most of the time people called me to get the contact details of someone or other. To overcome this problem I uploaded the spreadsheet to Google Docs (now known as Google Drive) and embedded it on Google Sites. People started referring to this Google Sites website, which was configured on a subdomain of my then blog domain as baraunians.techds.in. Then, in September 2009, I registered Baraunians.com and relaunched the Group's website. The relaunched website, www.Baraunians.com, was welcomed by the existing members and became a super hit. We received calls from many people who had been desperately searching for their friends and had found our Website through Google Search.

The Google features that helped in creating the Website:

- Google Sites

- Google Docs

- Google Forms

- Google Picasa Albums

- Google Groups

- Google Search

- Google Mail

Image: The www.Baraunians.com Website

In the meantime, people started sending photographs from their personal collections, and these were posted on the Website in an organized manner, embedded via Picasa. Whoever visited the Site was bound to become nostalgic. People also started sharing stories from their childhood and School days, and those were duly posted in the appropriate sections of the Website. Parents too started reconnecting with their long-lost colleagues. You may like to visit the website at http://www.Baraunians.com.

Immediately after the 25th July Get-Together, we all started discussing the need for a Mega-Get-Together, and people started leaning towards the proposal of the third week of December 2009. We discussed a mutually convenient date and zeroed in on 26th December 2009. For the venue, we discussed several suggestions and even visited many of the places to understand the costs.

On 16th October 2009, we finalized the Centaur Hotel, Delhi (situated near IGI Airport) for the Mega-Get-Together (MGT). We discussed the contribution amount and the mode of collection at length. We were not able to open a bank account for the Group in such a short span of time (due to the formalities involved). Rajiv Ranjan Dwivedi came forward and offered a bank account of his, which he was not using, for depositing contributions. Contributions started pouring into the account by cheque and cash. The details of contributions received were transparently posted on our Website.

Thereafter, we had several meetings to finalize the finer details of the Mega-Get-Together.

Image: Planning Meeting at Fortune Hotel, Noida

Image: Planning Meeting at Centaur Hotel, Delhi

It was also decided that we would invite our erstwhile School Teachers and reimburse all their travel expenses. Accommodation for outstation Baraunians and Teachers was booked at the Ginger Hotel (Taj Group) near New Delhi Railway Station.

Needless to say, the MGT-2009 reunion was a mega success, with people from all parts of the world participating. Even the photographers and videographers were spellbound to see such an emotional gathering. They later told us that it was an unbelievable event. You may read the complete narration of the MGT-2009 event and the nostalgic reactions of some of the attendees.

Thereafter, we held MGTs on 25th Dec 2010 in Barauni (at our School campus), on 24th Dec 2011 in Varanasi and on 24th Dec 2012 in Ranchi. The details may be found on the Baraunians Website. Baraunians also started organizing Mini-Get-Togethers in different cities.

There were four catalysts behind the burgeoning success of the Baraunians Group.

- The latent desire of every Baraunian to be reunited.

- The forceful visit of Rajesh Chandra Prasad from Rajkot to Delhi on 25th July 2009.

- Alok Mall's remark to me that, because we had organized a Mini-Get-Together in July, the fizz of the desire for a grand meet would die down. I took it as a challenge.

- The joining of Umesh Sharma of Batch-1980 on 13th Sep 2009. Prior to his joining we were rudderless. He gave great guidance to the Group with his able leadership and organizing power.

The day the Group deviates from its primary goal, I will have to rethink the existence of the Group-Mail and the Website www.Baraunians.com.